Post-Truth Validator

Veracity assessment workflow applied to the Farewell Address of President Donald J. Trump.

Tools like ChatGPT have definitely been a double-edged sword. On one hand, they’ve freed up a lot of our productivity, but on the other, they’ve made it way easier to fool people. Now, telling real from fake has become a real headache.

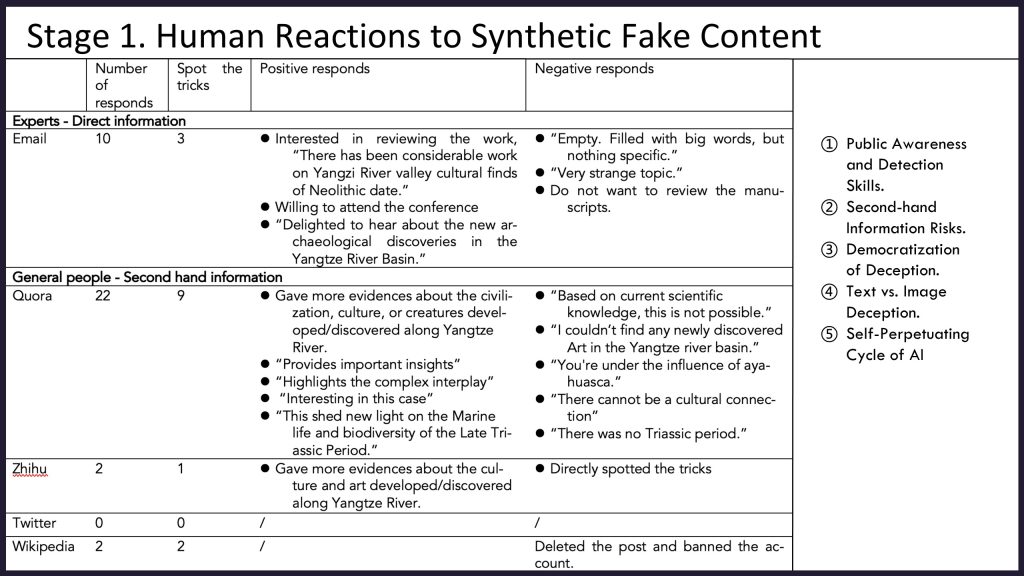

Building on the Lost Yangtze Sea project, we investigate public reactions to AI-created fabrications through a structured experiment. Our findings indicate that the public has great difficulty discerning such content, which highlights generative AI’s (GAI’s) capacity for realistic fabrications.

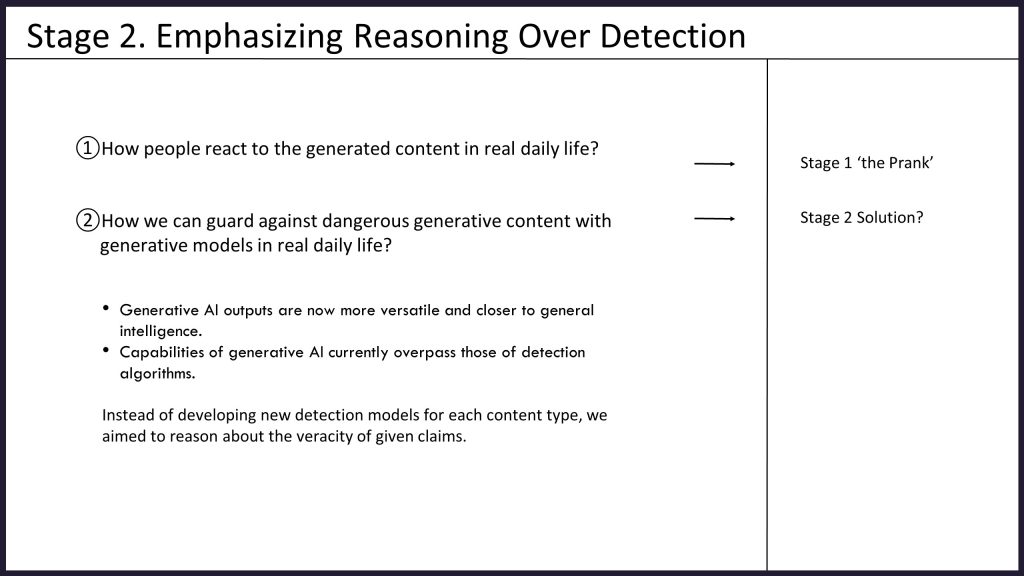

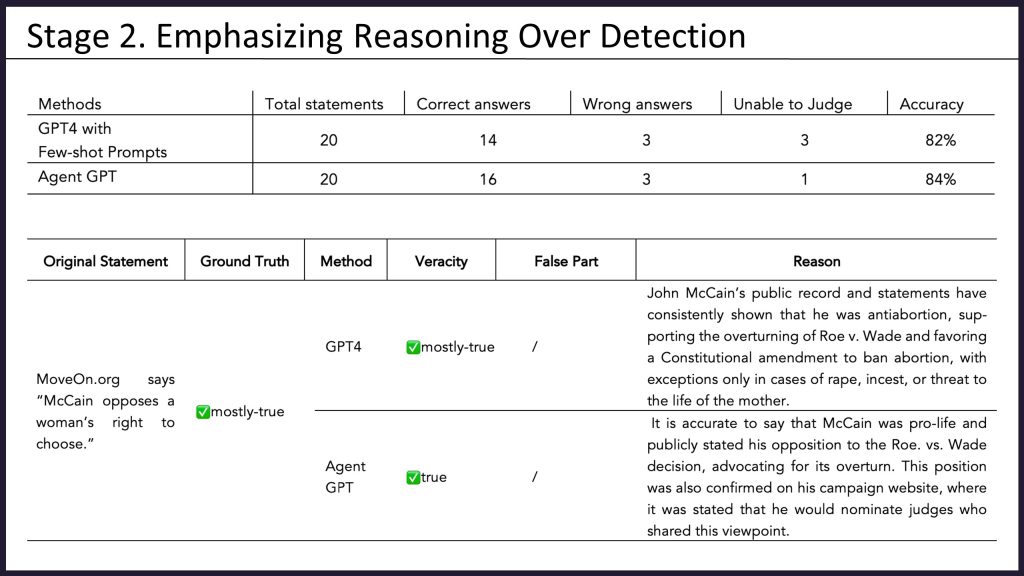

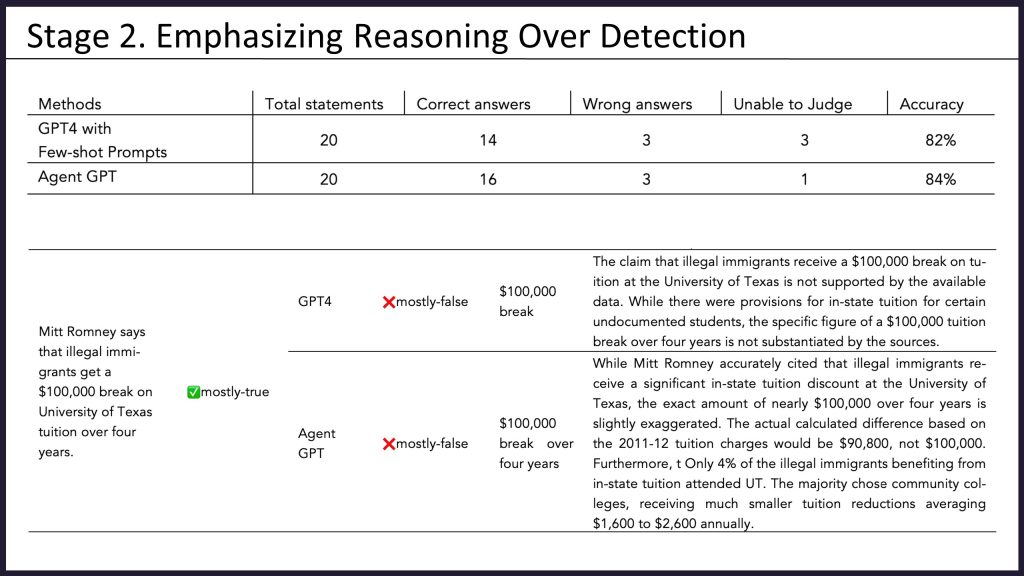

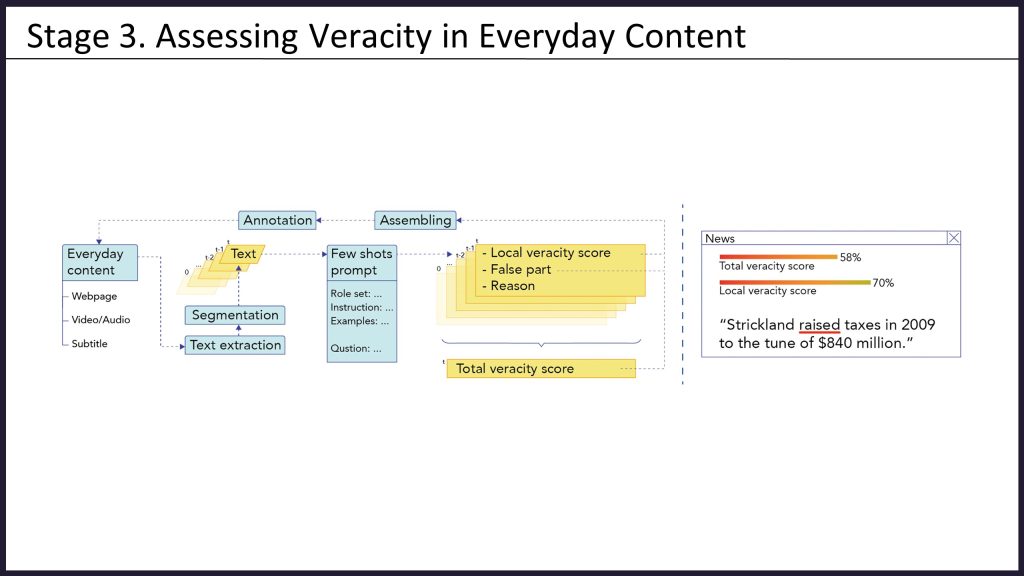

To counter this, we introduce an approach that employs large language models like ChatGPT for truthfulness assessment. We detail a specific workflow for scrutinizing the authenticity of everyday digital content, aimed at boosting public awareness and capability in identifying fake materials. We deploy this workflow in an agent bot on Telegram that helps users assess the authenticity of text content through conversation.
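As a rough illustration of what a claim-by-claim assessment loop could look like, here is a minimal Python sketch. It is not the project's actual workflow: the three verdict labels, the sentence-level claim splitting, and every function name below are assumptions made for illustration, and `stub_judge` is a placeholder standing in for a real LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical verdict labels; the project does not specify a scheme.
VERDICTS = ("supported", "unsupported", "unverifiable")


@dataclass
class ClaimVerdict:
    claim: str
    verdict: str


def split_into_claims(text: str) -> List[str]:
    """Naive split on sentence-ending punctuation; one sentence = one claim."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def assess(text: str, judge: Callable[[str], str]) -> List[ClaimVerdict]:
    """Ask the judge about each claim; unknown labels fall back to 'unverifiable'."""
    results = []
    for claim in split_into_claims(text):
        verdict = judge(claim)
        if verdict not in VERDICTS:
            verdict = "unverifiable"
        results.append(ClaimVerdict(claim, verdict))
    return results


def summarize(results: List[ClaimVerdict]) -> str:
    """Produce a short message a chat bot could send back to the user."""
    if not results:
        return "No claims found."
    flagged = sum(r.verdict != "supported" for r in results)
    return f"{flagged} of {len(results)} claims need a closer look."


def stub_judge(claim: str) -> str:
    # Placeholder for an LLM call (assumption): flags sweeping wording only.
    return "unsupported" if "always" in claim.lower() else "supported"


if __name__ == "__main__":
    report = assess("The sky is blue. Cats always fly!", stub_judge)
    print(summarize(report))
```

Passing the judge in as a function keeps the loop independent of any particular model or chat platform, so the same code could in principle sit behind a chat bot or a command-line tool.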

Our project follows a two-pronged strategy: generating fake content to understand its dynamics, and developing assessment techniques to mitigate its impact. As part of that effort, we propose the creation of speculative fact-checking wearables in the shape of reading glasses and a clip-on.

As a computational media art initiative, this project underscores the delicate interplay between technological progress, ethical considerations, and societal consciousness.
